COMMON-AI-VERSE

COMMON-AI-VERSE is designed as an empathetic interface that captures the interpersonal relationships within a group of people and relates them to a series of intelligent agents, which react to the gestures, behaviors, and communication among the group members.

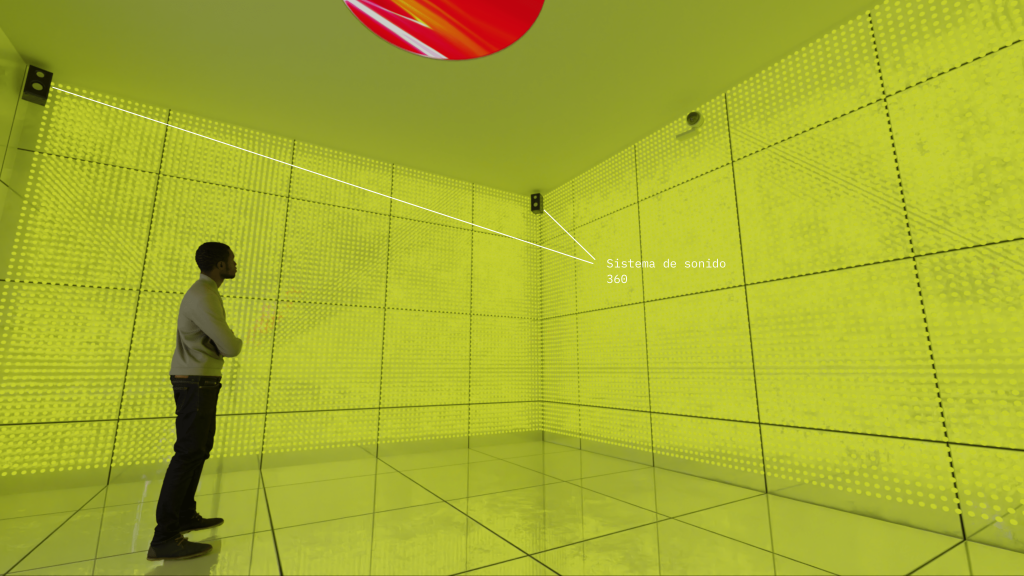

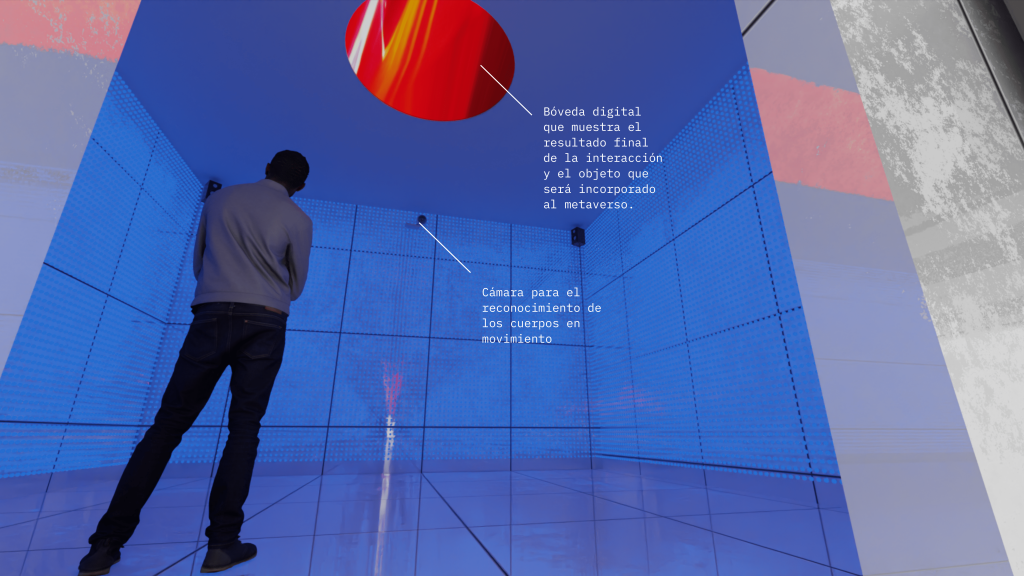

These interactions, captured both collectively and individually by a system of AI-equipped cameras, serve as input that causes the agents to grow more complex or simpler, and to become integrated or excluded. The installation also includes an array of RGB LEDs, a surround sound system, and a vault-like screen, which respond visually and audibly to these inputs and together become a new member of the participating group. The installation proposes new atmospheres, reinforces certain gestures, and sympathetically accompanies the relationships established among the group members.

In this way, the overall concept of the installation is to welcome people and establish a symbolic relationship between them and a series of intelligent entities that learn from the group, strive to integrate organically, and propose behavioral improvements according to their own criteria.

The installation also offers a positive outlook on the relevance and necessity of collective work in harmonizing with artificial intelligence, emphasizing a group perspective in contrast to the individualism prevalent in recent years.

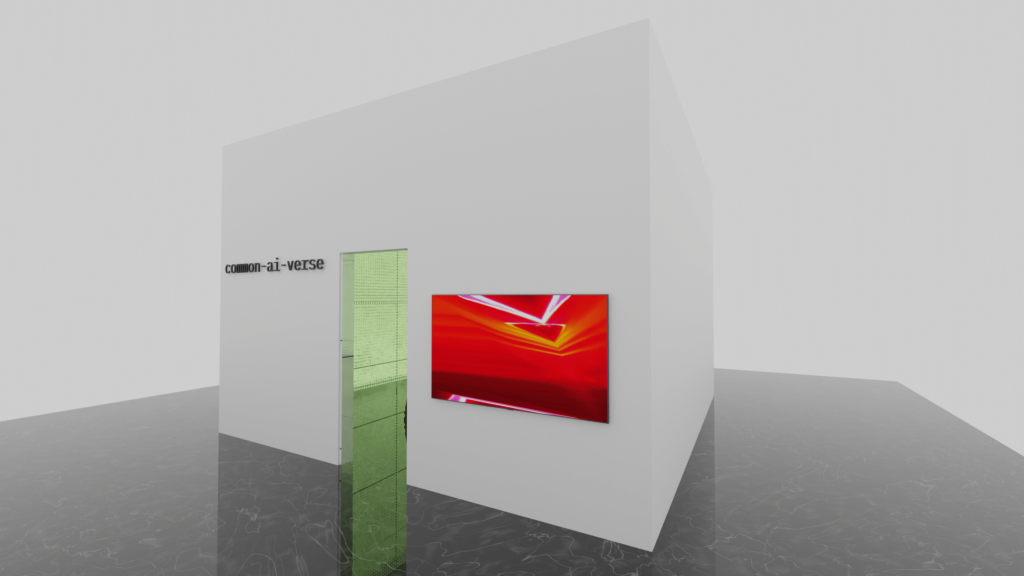

All the data is collected and compressed into a 3D object, which serves as a temporal log and becomes part of a digital history in the metaverse.

Artistic vision

Our artistic concept for this project centers on discovering fresh, inventive ways to interact with and develop an AI system. This entails using human senses as inputs to the AI system, alongside conventional device inputs. We are also exploring alternative input values, such as temperature, weight, fingerprints, and facial recognition. The ultimate objective is to create an AI system that can be viewed as a “live creation” and perceived through various media. We are also researching alternative ways of storing data in the AI system’s database, including non-integer and non-verbal input values.

The installation is a hybrid of virtual and physical elements, with inputs, reactions, and visualizations spanning the physical and virtual worlds. Our goal is to create a new and unique experience that challenges the traditional boundaries between the artistic and technological fields.

Interaction Flow

STEP 1:

- Participant recognition
  - Using PoseNet, an open-source AI pose-estimation model, we recognize the participants and assign each one an ID.
  - Maximum of 4 participants.
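PoseNet estimates body keypoints frame by frame but does not by itself assign persistent identities across frames. A minimal sketch of how stable IDs could be kept, assuming each detected pose has already been reduced to the centroid of its keypoints: every new centroid is matched greedily to the nearest existing track within a radius, otherwise it receives a fresh ID, and no more than four participants are tracked. `Tracker`, `centroid`, and `MATCH_RADIUS` are hypothetical names, not part of PoseNet or of the installation's code.

```python
import math
from dataclasses import dataclass, field

Point = tuple[float, float]

MAX_PARTICIPANTS = 4   # limit stated for the installation
MATCH_RADIUS = 0.5     # hypothetical: max centroid jump between frames (keypoint units)


def centroid(keypoints: list[Point]) -> Point:
    """Average of a pose's keypoint coordinates."""
    xs, ys = zip(*keypoints)
    return (sum(xs) / len(xs), sum(ys) / len(ys))


@dataclass
class Tracker:
    """Hypothetical helper that keeps participant IDs stable across frames."""
    tracks: dict[int, Point] = field(default_factory=dict)
    next_id: int = 1

    def update(self, centroids: list[Point]) -> dict[int, Point]:
        available = dict(self.tracks)  # tracks not yet claimed in this frame
        current: dict[int, Point] = {}
        for c in centroids:
            if len(current) >= MAX_PARTICIPANTS:
                break  # people beyond the cap are ignored
            nearest = min(available, key=lambda pid: math.dist(available[pid], c), default=None)
            if nearest is not None and math.dist(available[nearest], c) <= MATCH_RADIUS:
                pid = nearest
                del available[pid]  # one track per person per frame
            else:
                pid = self.next_id  # new participant
                self.next_id += 1
            current[pid] = c
        self.tracks = current  # tracks unseen in this frame are dropped
        return current
```

Dropping unseen tracks immediately keeps the sketch short; a production tracker would more likely tolerate a few missed frames before releasing an ID.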

STEP 2:

- The main intelligent agent starts analyzing the relationships between the participants:
  - Distances between them
  - Physical contact
  - Facing positions
  - Movements in space
  - Individual micro-gestures
  - Interaction times
  - Conversations

- Audiovisual reinforcement of actions:
  - Visual and sound effects triggered by physical contact between participants
  - Visual and sound effects driven by proximity or distance, following the metaphor of frequency waves: the greater the distance, the lower the intensity.
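The pairwise analysis and the frequency-wave metaphor of STEP 2 could be computed roughly as follows. This is a sketch under stated assumptions: each participant already has a floor position, a unit facing vector, and a list of keypoints in the same units; "contact" means any two keypoints closer than a threshold; two people face each other when each one's facing vector points within 30 degrees of the other; and intensity follows a softened inverse-square falloff, one plausible reading of "greater distance means lower intensity". All thresholds and function names are hypothetical.

```python
import math
from itertools import combinations

Point = tuple[float, float]

CONTACT_DIST = 0.15                       # hypothetical: keypoints closer than this "touch"
FACING_COS = math.cos(math.radians(30))   # hypothetical: within 30 degrees counts as facing
REF_DIST = 1.0                            # hypothetical: distance at which intensity halves


def intensity(d: float) -> float:
    """Softened inverse-square falloff: 1.0 at d = 0, 0.5 at REF_DIST, tending to 0."""
    return 1.0 / (1.0 + (d / REF_DIST) ** 2)


def in_contact(kp_a: list[Point], kp_b: list[Point]) -> bool:
    """True if any keypoint of one person is within CONTACT_DIST of the other's."""
    return any(math.dist(p, q) < CONTACT_DIST for p in kp_a for q in kp_b)


def faces(pos_a: Point, dir_a: Point, pos_b: Point) -> bool:
    """True if unit vector dir_a points within 30 degrees of the direction from a to b."""
    dx, dy = pos_b[0] - pos_a[0], pos_b[1] - pos_a[1]
    norm = math.hypot(dx, dy)
    if norm == 0.0:
        return False
    return (dir_a[0] * dx + dir_a[1] * dy) / norm >= FACING_COS


def pair_features(people: dict[int, dict]) -> dict[tuple[int, int], dict]:
    """Relationship features for every unordered pair of participant IDs."""
    feats = {}
    for a, b in combinations(sorted(people), 2):
        pa, pb = people[a], people[b]
        d = math.dist(pa["pos"], pb["pos"])
        feats[(a, b)] = {
            "distance": d,
            "intensity": intensity(d),
            "contact": in_contact(pa["keypoints"], pb["keypoints"]),
            "face_to_face": faces(pa["pos"], pa["dir"], pb["pos"])
            and faces(pb["pos"], pb["dir"], pa["pos"]),
        }
    return feats
```

The softened form avoids the division by zero a pure `1 / d**2` law would hit when two participants stand at the same spot.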

STEP 3:

- Sympathetic response from the system, expressed through abstract and concrete visual effects on the LED matrix projected against the wall.
  - “Cold” and “warm” sounds depending on the system’s analysis.
  - “Intense, calm, cold, or warm” lighting and visual effects according to the analysis.
  - Seeking energetic harmony with the group, integrating the machine as a member.
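One way to turn the analysis into the “intense, calm, cold, or warm” responses of STEP 3 is to reduce the group state to two numbers, warmth and energy, and derive both a label and an LED color from them. The sketch below assumes group features already normalized to [0, 1] (average proximity intensity, share of pairs in contact, movement energy); the weights, palette, and names are hypothetical, not the installation's actual mapping.

```python
from dataclasses import dataclass

COLD_RGB = (60, 120, 255)   # hypothetical "cold" LED color
WARM_RGB = (255, 140, 40)   # hypothetical "warm" LED color


@dataclass
class Atmosphere:
    warmth: float  # 0.0 = cold, 1.0 = warm
    energy: float  # 0.0 = calm, 1.0 = intense

    @property
    def label(self) -> str:
        mood = "intense" if self.energy >= 0.5 else "calm"
        temp = "warm" if self.warmth >= 0.5 else "cold"
        return f"{mood}, {temp}"


def analyse(mean_intensity: float, contact_ratio: float, movement: float) -> Atmosphere:
    """Hypothetical weighting of group features (each in [0, 1]) into an atmosphere."""
    warmth = min(1.0, 0.7 * mean_intensity + 0.3 * contact_ratio)
    energy = min(1.0, movement)
    return Atmosphere(warmth, energy)


def led_color(atm: Atmosphere) -> tuple[int, int, int]:
    """Blend cold -> warm by warmth; scale brightness by energy (never below 30%)."""
    brightness = 0.3 + 0.7 * atm.energy
    return tuple(
        round((c + (w - c) * atm.warmth) * brightness)
        for c, w in zip(COLD_RGB, WARM_RGB)
    )
```

The same warmth value could also select between “cold” and “warm” sound banks, so light and sound stay consistent.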

STEP 4:

- Empathetic proposal for behavior change
  - The system suggests a new atmosphere to which the members must now adapt their behavior.
  - The system continues to read and reinforce the intended actions.
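The proposal-and-reinforcement loop of STEP 4 can be sketched as picking a target atmosphere that differs from the group's current one and then scoring, frame by frame, how closely the group has adapted. This assumes the group state is summarized as a (warmth, energy) point in the unit square; the four targets, the random choice, and the linear score are hypothetical choices, not the system's documented criteria.

```python
import math
import random

# Hypothetical target atmospheres as (warmth, energy) points in [0, 1] x [0, 1].
TARGETS = {
    "calm-warm": (0.8, 0.2),
    "intense-warm": (0.8, 0.8),
    "calm-cold": (0.2, 0.2),
    "intense-cold": (0.2, 0.8),
}


def propose(current: tuple[float, float], rng: random.Random) -> str:
    """Suggest a target other than the one the group is already closest to."""
    closest = min(TARGETS, key=lambda name: math.dist(TARGETS[name], current))
    return rng.choice(sorted(name for name in TARGETS if name != closest))


def adherence(current: tuple[float, float], target: str) -> float:
    """1.0 when the group sits on the target; falls linearly with distance.

    Dividing by the unit square's diagonal keeps the score within [0, 1].
    """
    return 1.0 - math.dist(current, TARGETS[target]) / math.sqrt(2)
```

The adherence score could then drive the reinforcement, for example by raising light intensity as the group approaches the suggested atmosphere.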

STEP 5:

- Display of results in the digital vault
  - Upload of a text file that updates the online 3D model representing the data.
  - Generation of a QR code to view and download the result.
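The file format used to update the online 3D model is not documented. As an illustration only, a session could be written as a Wavefront OBJ text file in which each sample becomes a vertex (time, warmth, energy) and the samples are joined by a polyline, with a short content hash forming the link that the QR code encodes. The sample fields, `BASE_URL`, and function names are hypothetical.

```python
import hashlib

BASE_URL = "https://example.org/common-ai-verse/session/"  # hypothetical viewer URL


def session_to_obj(samples: list[dict]) -> str:
    """Encode each sample as an OBJ vertex (t, warmth, energy) joined by a polyline."""
    lines = ["# COMMON-AI-VERSE session log (hypothetical format)"]
    for s in samples:
        lines.append(f"v {s['t']:.2f} {s['warmth']:.3f} {s['energy']:.3f}")
    if len(samples) >= 2:
        # OBJ line element; vertex indices are 1-based
        lines.append("l " + " ".join(str(i) for i in range(1, len(samples) + 1)))
    return "\n".join(lines) + "\n"


def session_url(obj_text: str) -> str:
    """Derive a short content-based ID so the QR code points at this exact log."""
    digest = hashlib.sha256(obj_text.encode("utf-8")).hexdigest()[:12]
    return BASE_URL + digest
```

The resulting URL could then be rendered with any QR library, for instance `qrcode.make(url)` from the third-party `qrcode` package.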

STEP 6:

- Participants exit the installation, and the system waits for the next group.

Exhibitions

- CCCB, Artificial Intelligence, October 18, 2023 – May 14, 2024

CREDITS

Solimán López (technical and artistic direction), Kris Pilcher (Visual Interface), Esen Ka (AI data visualization and connections), Mohsen Hazrati (Metaverse visualization), Alejandro Martín (curator/project manager), Dr. Holger Sprengel (Executive Producer), Marc Gálvez (Production Assistant).

Solution and integration of Artificial Intelligence: IIIA – CSIC Barcelona with Jordi Sabater, Joan Jené, Cristian Cozar, and Lissette Lemus.

Produced by ESPRONCEDA – Institute of Art & Culture with the support of the Department of Culture – Generalitat de Catalunya (OSIC).